Stream ChatGPT Responses Using LangChain

In this post, we’ll dive into how to use LangChain to stream real-time responses from ChatGPT.

1. Installation

First, install langchain along with its OpenAI extension, langchain-openai.

pip install langchain langchain-openai

The versions used in this post are as follows:

$ pip list | grep langchain
langchain                0.2.12
langchain-core           0.2.29
langchain-openai         0.1.21
langchain-text-splitters 0.2.2

2. Setting Up Your API Key

Next, create a .openai file in your working directory and store your API key in it. Set the OPENAI_API_KEY environment variable using the following code:

import os

with open('.openai') as f:
    os.environ['OPENAI_API_KEY'] = f.read().strip()

If you’re using Google Colab, it’s more convenient to use the “Secrets” feature instead of storing the API key in a file.

You can now safely store your private keys, such as your @huggingface or @kaggle API tokens, in Colab! Values stored in Secrets are private, visible only to you and the notebooks you select. pic.twitter.com/dz9noetUAL

— Colaboratory (@GoogleColab) November 1, 2023
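The file-based setup above fails with a bare FileNotFoundError when the .openai file is missing, and it overwrites a key that is already set in the environment. As a minimal sketch (load_api_key is a hypothetical helper name, not part of LangChain or the OpenAI SDK), the key lookup could prefer an existing environment variable and fall back to the file:

```python
import os
from pathlib import Path


def load_api_key(path=".openai", env_var="OPENAI_API_KEY"):
    """Return the API key and make sure it is exported as env_var.

    load_api_key is a hypothetical helper, not part of LangChain or OpenAI.
    """
    # Prefer a key that is already set (for example, by the shell).
    key = os.environ.get(env_var, "").strip()
    if not key:
        key_file = Path(path)
        if not key_file.is_file():
            raise FileNotFoundError(
                f"{env_var} is not set and the key file {path!r} does not exist"
            )
        key = key_file.read_text().strip()
    if not key:
        raise ValueError(f"The key file {path!r} is empty")
    # Export the key so libraries that read the environment can find it.
    os.environ[env_var] = key
    return key
```

Calling load_api_key() once before creating the LLM would then replace the with open(...) snippet.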

3. Initializing the LLM

Now, let’s set up the LLM. In this example, we’ll use GPT-4o mini (gpt-4o-mini-2024-07-18), which is a cost-effective alternative to GPT-3.5 Turbo.

from langchain_openai import ChatOpenAI

llm = ChatOpenAI(model='gpt-4o-mini-2024-07-18', temperature=0)

4. Streaming Real-Time Responses

To stream responses in real time, use the stream method. Here’s how you can display ChatGPT’s response directly on the screen:

for chunk in llm.stream("Explain LangChain for beginners."):
    print(chunk.content, end="", flush=True)

Output (the following content is streamed in real time):

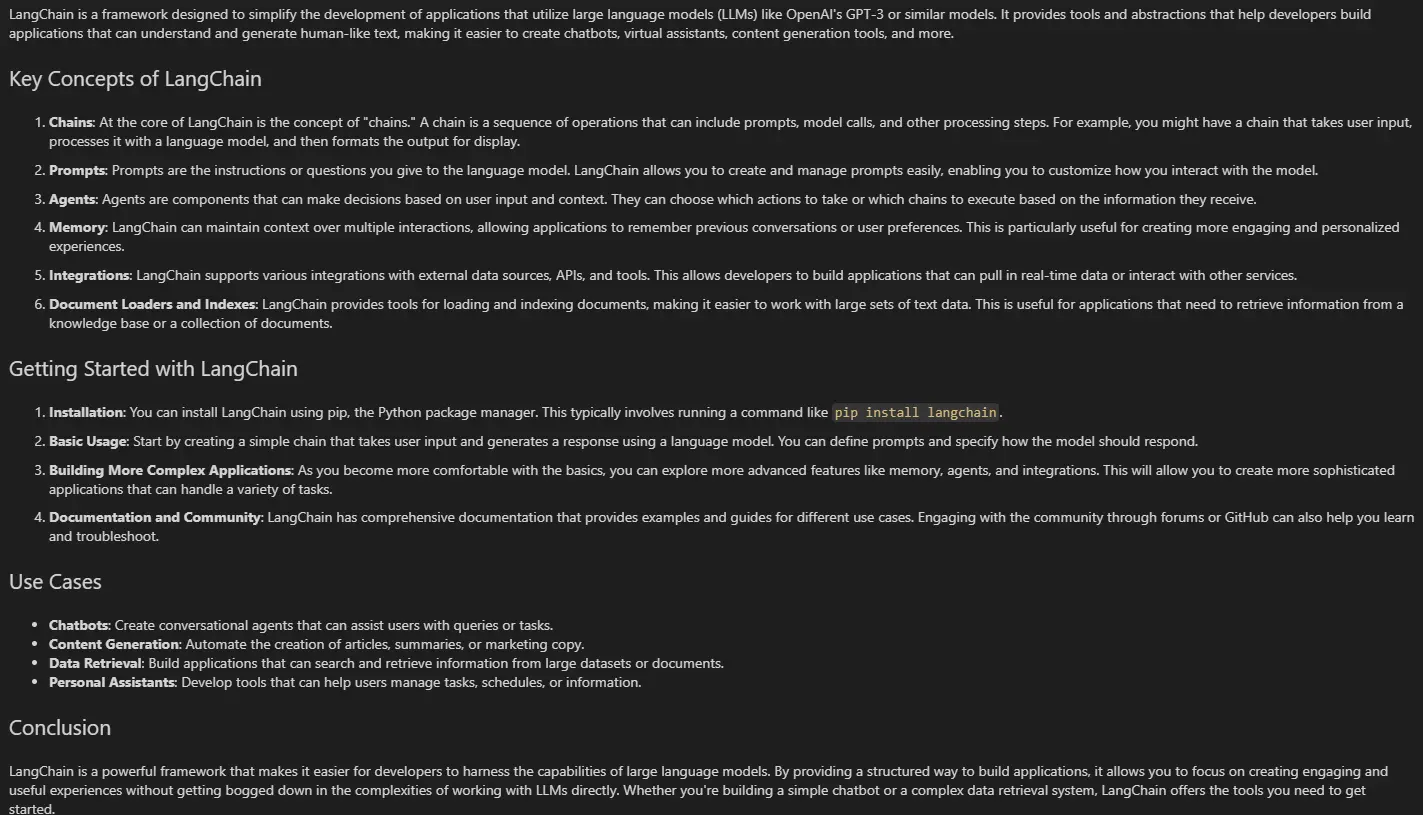

LangChain is a framework designed to simplify the development of applications that utilize large language models (LLMs) like OpenAI's GPT-3 or similar models. It provides tools and abstractions that help developers build applications that can understand and generate human-like text, making it easier to create chatbots, virtual assistants, content generation tools, and more.

### Key Concepts of LangChain

1. **Chains**: At the core of LangChain is the concept of "chains." A chain is a sequence of operations that can include prompts, model calls, and other processing steps. For example, you might have a chain that takes user input, processes it with a language model, and then formats the output for display.
2. **Prompts**: Prompts are the instructions or questions you give to the language model. LangChain allows you to create and manage prompts easily, enabling you to customize how you interact with the model.
3. **Agents**: Agents are components that can make decisions based on user input and context. They can choose which actions to take or which tools to use based on the information they receive. This is useful for creating more dynamic and responsive applications.
4. **Memory**: LangChain can maintain context over multiple interactions, which is essential for applications like chatbots that need to remember previous conversations. This memory feature allows for more coherent and contextually aware interactions.
5. **Tooling**: LangChain provides integrations with various tools and APIs, allowing your application to perform tasks beyond just text generation. For example, it can call external APIs, access databases, or perform calculations.

### Getting Started with LangChain

1. **Installation**: You can install LangChain using pip, the Python package manager. This typically involves running a command like `pip install langchain`.
2. **Basic Usage**: To use LangChain, you typically start by defining a prompt and then creating a chain that processes that prompt with a language model. You can then run the chain with user input to get a response.
3. **Building Applications**: As you become more familiar with LangChain, you can start building more complex applications by combining chains, using agents, and integrating memory. You can also customize prompts and responses to fit your specific use case.

### Example Use Cases

- **Chatbots**: Create conversational agents that can answer questions, provide recommendations, or assist with tasks.
- **Content Generation**: Automate the creation of articles, blog posts, or marketing copy based on user input or predefined topics.
- **Data Analysis**: Use language models to analyze and summarize data, generate reports, or extract insights from text.

### Conclusion

LangChain is a powerful tool for developers looking to leverage the capabilities of large language models in their applications. By providing a structured way to build and manage interactions with these models, LangChain makes it easier to create sophisticated applications that can understand and generate human-like text. Whether you're building a simple chatbot or a complex content generation system, LangChain offers the tools you need to get started.
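The print loop above discards the text once it has been shown. If the full response is also needed afterwards (for logging or caching, say), the chunks can be collected while they are printed. The sketch below is one way to do that; FakeChunk and fake_stream are hypothetical stand-ins so the example runs without an API key, and stream_and_collect is an illustrative name. It assumes each chunk exposes its text as a string in a content attribute, as in the loop above.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator


@dataclass
class FakeChunk:
    """Hypothetical stand-in for a streamed chunk; only `content` is modelled."""
    content: str


def fake_stream(text: str, size: int = 5) -> Iterator[FakeChunk]:
    """Hypothetical stand-in for llm.stream(): yields the text in small pieces."""
    for start in range(0, len(text), size):
        yield FakeChunk(text[start:start + size])


def stream_and_collect(chunks: Iterable) -> str:
    """Print each chunk as it arrives and return the complete response text."""
    parts = []
    for chunk in chunks:
        print(chunk.content, end="", flush=True)
        parts.append(chunk.content)
    print()  # finish the line once the stream ends
    return "".join(parts)


full_text = stream_and_collect(fake_stream("LangChain is a framework for LLM apps."))
```

With the real model, the call would be stream_and_collect(llm.stream("Explain LangChain for beginners.")).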

5. Using in Jupyter Notebook

If you’re working in Jupyter Notebook or Google Colab, you can use Markdown from IPython.display to display the response in a clean Markdown format:

from IPython.display import Markdown, display, update_display

contents = ""
display_id = None

for chunk in llm.stream("Explain LangChain for beginners."):
    contents += chunk.content
    markdown = Markdown(contents)
    if not display_id:
        display_id = display(markdown, display_id=True).display_id
    else:
        # Use update_display to prevent screen flickering during updates
        update_display(markdown, display_id=display_id)

Output (The response will be displayed in real time):

6. Using in Streamlit

With Streamlit, you can easily create a simple application as shown below.

(For production use, it’s recommended to manage your API key with st.secrets.)

import os

import streamlit as st
from langchain_openai import ChatOpenAI

# Initialize the LLM
if "llm" not in st.session_state:
    with open(".openai") as f:
        os.environ["OPENAI_API_KEY"] = f.read().strip()
    st.session_state.llm = ChatOpenAI(model="gpt-4o-mini-2024-07-18", temperature=0)

# Set the title
st.title("ChatGPT Streaming Test")

# Function that is called when text is entered into the text box
def stream_response():
    user_input = st.session_state.user_input
    if user_input:
        response_text = ""
        for chunk in st.session_state.llm.stream(user_input):
            response_text += chunk.content
            st.session_state.response_text = response_text
            response_area.markdown(response_text)

# Initialize session state on first run
if "response_text" not in st.session_state:
    st.session_state.response_text = ""

# Create a text box and execute stream_response when Enter is pressed
st.text_input("Ask a question to ChatGPT:", key="user_input", on_change=stream_response)

# Create an empty placeholder and display the previous response from the session state
response_area = st.empty()
if st.session_state.response_text:
    response_area.markdown(st.session_state.response_text)

Output (The response is displayed in real time)
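The Streamlit loop above reads chunk.content from every chunk by hand. A small generator that turns the chunk stream into plain strings keeps that detail in one place. This is only a sketch: text_chunks is a hypothetical helper, and the demo list stands in for st.session_state.llm.stream(user_input), on the assumption that some chunks in a real stream may carry empty content.

```python
from types import SimpleNamespace
from typing import Iterable, Iterator


def text_chunks(chunks: Iterable) -> Iterator[str]:
    """Yield the non-empty text of each chunk (hypothetical helper)."""
    for chunk in chunks:
        if chunk.content:
            yield chunk.content


# Stand-in for st.session_state.llm.stream(user_input); real chunks are
# assumed to expose their text in a `content` attribute, as in the app above.
demo = [SimpleNamespace(content=c) for c in ["", "Hello", ", ", "world", ""]]
pieces = list(text_chunks(demo))
```

In Streamlit releases that provide st.write_stream, passing text_chunks(st.session_state.llm.stream(user_input)) to it should render the pieces as they arrive and return the full text, though this has not been checked against the package versions listed in this post.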