Exploring LangChain's Quickstart (5) - Serve as a REST API (LangServe)

This series dives into how to use LangChain, based on the LangChain Quickstart guide.

In this post, we’ll explore how to deploy LangChain agents as a REST API using LangServe.

Recap of the Previous Post

In our last post, we created an agent that combines tools for answering LangChain-related queries with internet search capabilities.

Here’s a recap of the code we used:

import os

from langchain import hub
from langchain.agents import AgentExecutor, create_openai_functions_agent
from langchain.tools.retriever import create_retriever_tool
from langchain_community.document_loaders import WebBaseLoader
from langchain_community.tools.tavily_search import TavilySearchResults
from langchain_community.vectorstores import FAISS
from langchain_openai import ChatOpenAI, OpenAIEmbeddings
from langchain_text_splitters import RecursiveCharacterTextSplitter

# Set up the API key as environment variable
with open(".openai") as f:
    os.environ["OPENAI_API_KEY"] = f.read().strip()

# Load web page content
loader = WebBaseLoader("https://python.langchain.com/docs/get_started/introduction")
docs = loader.load()

# Load embeddings
embeddings = OpenAIEmbeddings()

# Split the documents
text_splitter = RecursiveCharacterTextSplitter()
documents = text_splitter.split_documents(docs)

# Vectorize documents and create a vector store
vector = FAISS.from_documents(documents, embeddings)

# Create a retriever
retriever = vector.as_retriever()

# Search tool for document retrieval
retriever_tool = create_retriever_tool(
    retriever,
    "langchain_search",
    "A search tool for LangChain-related queries. Use this for questions about LangChain!",
)

# Set Tavily API key
with open(".tavily") as f:
    os.environ["TAVILY_API_KEY"] = f.read().strip()

# Internet search tool
search = TavilySearchResults()

# List of tools in use
tools = [retriever_tool, search]

# Get template from LangChain Hub
template = hub.pull("hwchase17/openai-functions-agent")

# Create an agent
llm = ChatOpenAI(model="gpt-3.5-turbo", temperature=0)
agent = create_openai_functions_agent(llm, tools, template)
agent_executor = AgentExecutor(agent=agent, tools=tools, verbose=True)

9. Operating as a REST API (LangServe)

9.1. Installing LangServe

First, install LangServe with the following command:

pip install "langserve[all]"
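After installing, it can be useful to confirm that the modules serve.py imports are actually visible to the interpreter you will run it with. The following is a minimal sketch using only the standard library; `missing_modules` and the `REQUIRED` list are hypothetical names chosen for illustration, and the list simply mirrors a few of the top-level imports used later in this post.

```python
from importlib.util import find_spec


def missing_modules(names):
    """Return the top-level module names that cannot be found for import."""
    return [name for name in names if find_spec(name) is None]


# Top-level modules that serve.py in this post imports directly (illustrative list).
REQUIRED = ["langserve", "fastapi", "uvicorn", "langchain", "langchain_openai"]

# An empty list means every module was found in the current environment.
print("Missing modules:", missing_modules(REQUIRED))
```

If any names are printed, install the corresponding packages into the same environment before running serve.py.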

9.2. Creating serve.py

Create a file named serve.py with the following content:

import os
from typing import List

from fastapi import FastAPI
from langchain import hub
from langchain.agents import AgentExecutor, create_openai_functions_agent
from langchain.pydantic_v1 import BaseModel, Field
from langchain.tools.retriever import create_retriever_tool
from langchain_community.document_loaders import WebBaseLoader
from langchain_community.tools.tavily_search import TavilySearchResults
from langchain_community.vectorstores import FAISS
from langchain_core.messages import BaseMessage
from langchain_openai import ChatOpenAI, OpenAIEmbeddings
from langchain_text_splitters import RecursiveCharacterTextSplitter
from langserve import add_routes

# Set up the API key as environment variable
with open(".openai") as f:
    os.environ["OPENAI_API_KEY"] = f.read().strip()

# Load web page content
loader = WebBaseLoader("https://python.langchain.com/docs/get_started/introduction")
docs = loader.load()

# Load embeddings
embeddings = OpenAIEmbeddings()

# Split the documents
text_splitter = RecursiveCharacterTextSplitter()
documents = text_splitter.split_documents(docs)

# Vectorize documents and create a vector store
vector = FAISS.from_documents(documents, embeddings)

# Create a retriever
retriever = vector.as_retriever()

# Search tool for document retrieval
retriever_tool = create_retriever_tool(
    retriever,
    "langchain_search",
    "A search tool for LangChain-related queries. Use this for questions about LangChain!",
)

# Set Tavily API key
with open(".tavily") as f:
    os.environ["TAVILY_API_KEY"] = f.read().strip()

# Internet search tool
search = TavilySearchResults()

# List of tools in use
tools = [retriever_tool, search]

# Get template from LangChain Hub
template = hub.pull("hwchase17/openai-functions-agent")

# Create an agent
llm = ChatOpenAI(model="gpt-3.5-turbo", temperature=0)
agent = create_openai_functions_agent(llm, tools, template)
agent_executor = AgentExecutor(agent=agent, tools=tools, verbose=True)

# Create an app
app = FastAPI(
    title="LangChain Server",
    version="1.0",
    description="A simple API server using LangChain's Runnable interfaces",
)

# Adding routes (OpenAI)
add_routes(
    app,
    llm,
    path="/openai",
)


# Adding routes (Agent)
# (As the current AgentExecutor does not have defined schemas,
# you need to set up its input/output schemas.)
class Input(BaseModel):
    input: str
    chat_history: List[BaseMessage] = Field(
        ...,
        extra={"widget": {"type": "chat", "input": "location"}},
    )


class Output(BaseModel):
    output: str


add_routes(
    app,
    agent_executor.with_types(input_type=Input, output_type=Output),
    path="/agent",
)

if __name__ == "__main__":
    import uvicorn

    uvicorn.run(app, host="localhost", port=8000)
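serve.py reads the OpenAI and Tavily keys from the files .openai and .tavily every time it starts. As a possible variation, the sketch below wraps that step in a small helper that leaves an already exported environment variable untouched and fails with a clear message when the key file is missing or empty. `load_api_key` is a hypothetical helper written for this post, not part of LangChain or LangServe.

```python
import os
from pathlib import Path


def load_api_key(env_name, key_file):
    """Set os.environ[env_name] from key_file unless it is already set.

    Hypothetical helper for illustration; returns the key that ends up in use.
    """
    existing = os.environ.get(env_name)
    if existing:
        return existing  # an exported variable takes precedence over the file

    path = Path(key_file)
    if not path.is_file():
        raise FileNotFoundError(f"{env_name} is not set and {key_file} does not exist")

    key = path.read_text().strip()
    if not key:
        raise ValueError(f"{key_file} is empty")

    os.environ[env_name] = key
    return key
```

Under these assumptions, the two `with open(...)` blocks could be replaced by `load_api_key("OPENAI_API_KEY", ".openai")` and `load_api_key("TAVILY_API_KEY", ".tavily")`.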

9.3. Running serve.py

Execute serve.py to launch the application that will serve your REST API:

python serve.py

9.4. Using the REST API
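Because serve.py loads and indexes a web page before the server starts listening, the first requests can fail if they are sent too early. A minimal sketch of a readiness check, using only the standard library, is shown below; `wait_for_port` is a hypothetical helper, and the host and port are the ones passed to `uvicorn.run` in serve.py.

```python
import socket
import time


def wait_for_port(host, port, timeout=10.0, interval=0.2):
    """Return True once a TCP connection to host:port succeeds, False on timeout."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            # A successful connect means something is listening on the port.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)
```

For example, `wait_for_port("localhost", 8000, timeout=30)` could be called from a client script before the first request. It only checks that the port accepts connections, not that the agent itself answers correctly.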

To use the newly created REST API from Python:

from langserve import RemoteRunnable

remote_chain = RemoteRunnable("http://localhost:8000/agent/")
remote_chain.invoke({
    "input": "What is LangChain?",
    "chat_history": []
})

Output:

{'output': 'LangChain is a framework for developing applications powered by large language models (LLMs). It simplifies every stage of the LLM application lifecycle, including development, productionization, and deployment. The framework consists of open-source libraries such as langchain-core, langchain-community, langchain, langgraph, and langserve. LangChain also includes LangSmith, a developer platform for debugging, testing, evaluating, and monitoring LLM applications. It offers various use cases, an Expression Language (LCEL), and a comprehensive ecosystem of tools and integrations.'}
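`RemoteRunnable` is a convenience wrapper; the same route can also be called over plain HTTP, since LangServe exposes an `invoke` endpoint under each path registered with `add_routes`. The sketch below builds such a request with the standard library only. The helper names `build_invoke_request` and `invoke_agent` are hypothetical, and the sketch assumes an empty or already JSON-serializable `chat_history`.

```python
import json
from urllib import request


def build_invoke_request(base_url, question, chat_history=None):
    """Build a POST request for the /invoke endpoint under base_url."""
    # LangServe expects the runnable's input wrapped in an "input" field.
    body = {"input": {"input": question, "chat_history": chat_history or []}}
    return request.Request(
        url=base_url.rstrip("/") + "/invoke",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def invoke_agent(base_url, question, timeout=60):
    """Send the request and return the "output" field of the JSON response.

    Requires the server from serve.py to be running.
    """
    req = build_invoke_request(base_url, question)
    with request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))["output"]
```

With the server running, `invoke_agent("http://localhost:8000/agent/", "What is LangChain?")` should return a result comparable to the `RemoteRunnable` call above, though the exact response envelope may differ between LangServe versions.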

-

-

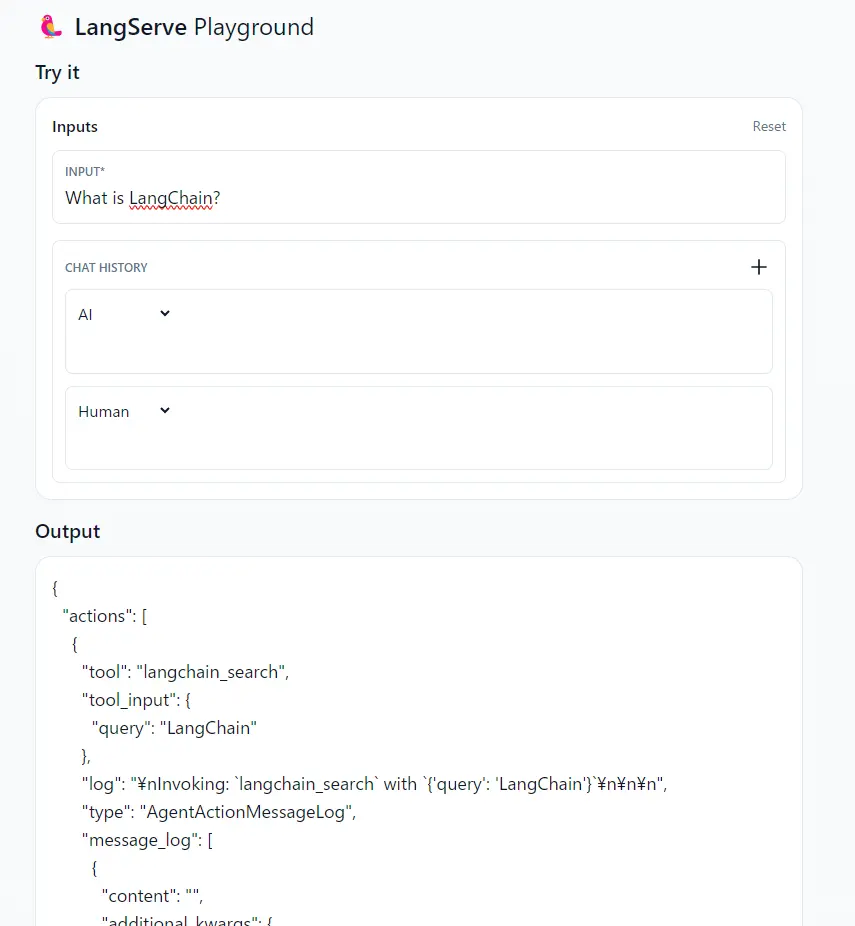

To test the API from a web browser, navigate to http://localhost:8000/agent/playground/.

Here, you can interact with the API via the Playground: